I recently finished reading the book “The Sounds of Star Wars.” Many of the techniques and recording sources behind Star Wars have been around for a long time, but there was something very cool about being able to play all the sound sources while reading about how they were recorded and processed. The book was a terrific read and I highly recommend it to any sound nerd :) Needless to say, it was very inspiring and made me want to try some Star Wars sound design myself. And what better to experiment with than the lightsaber?

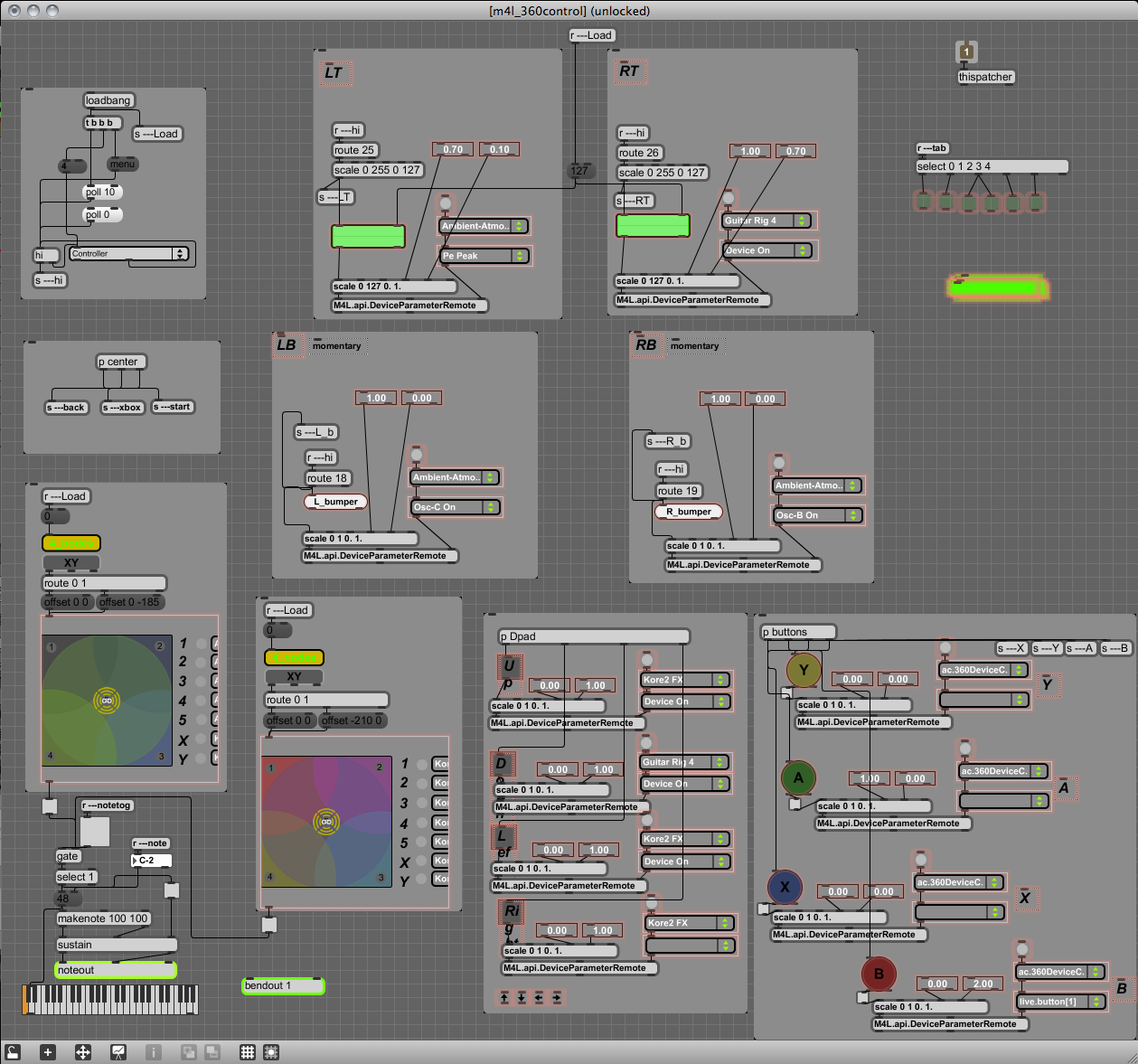

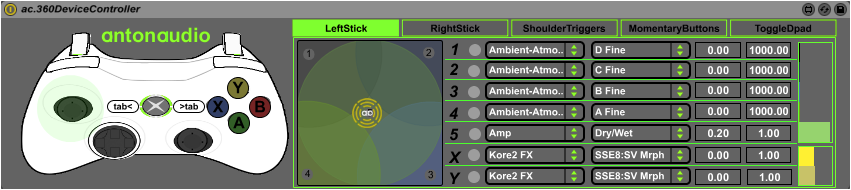

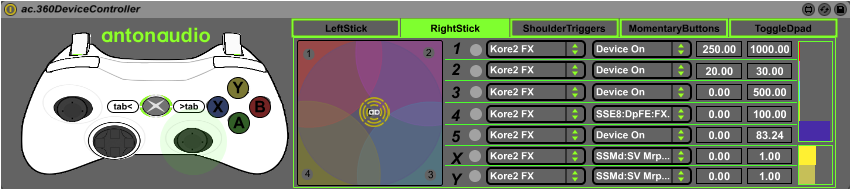

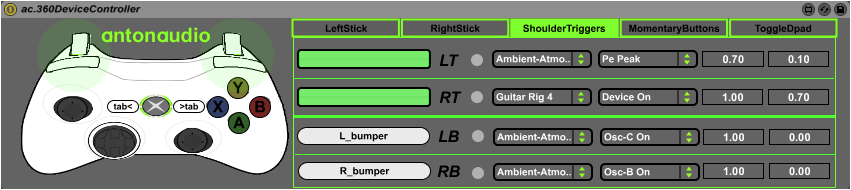

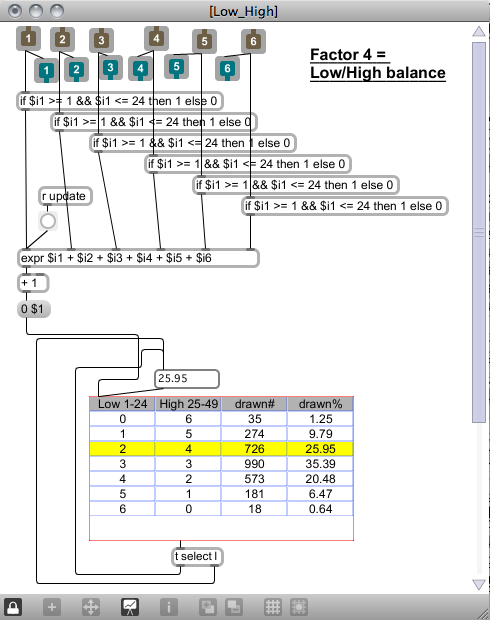

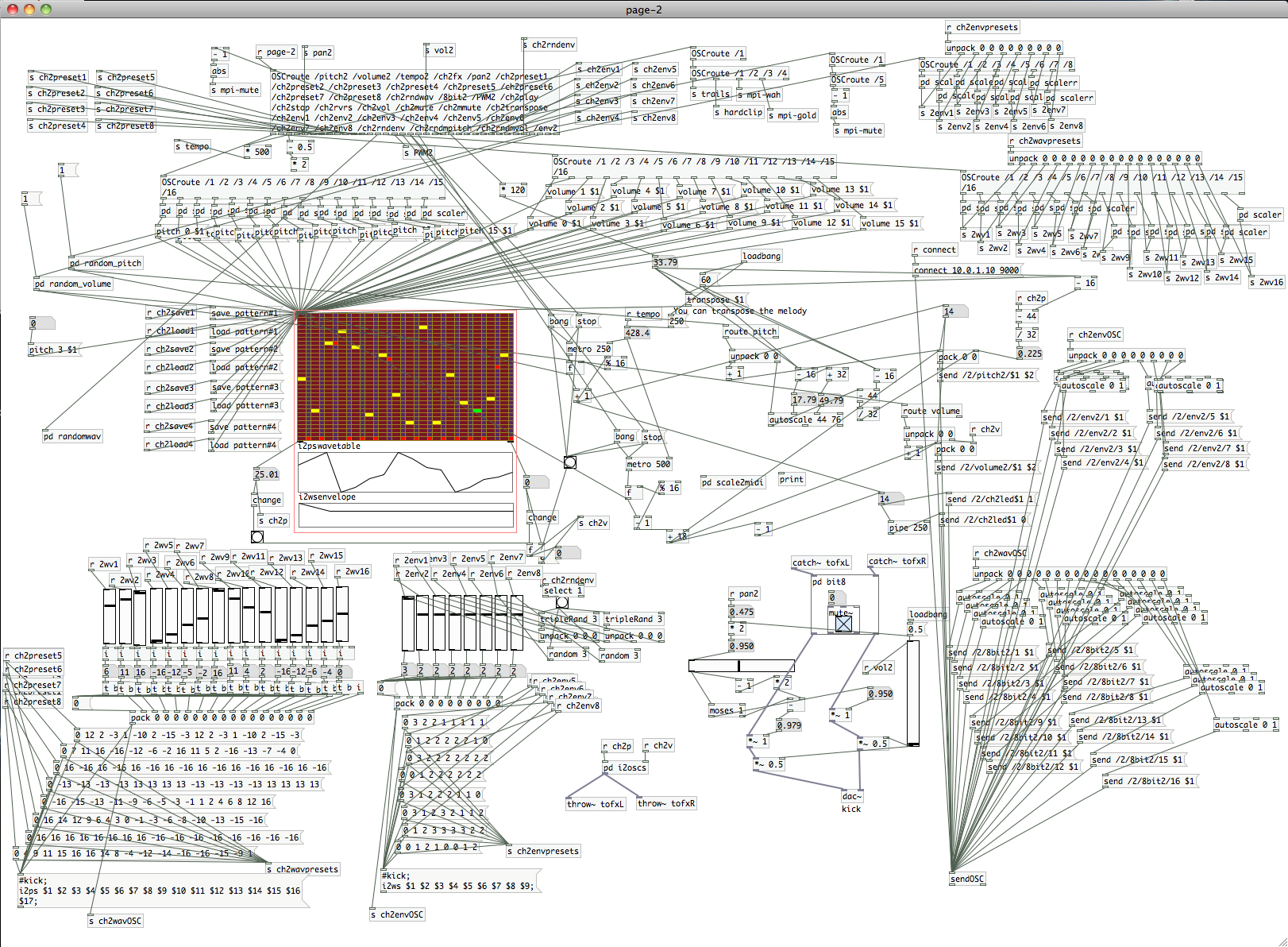

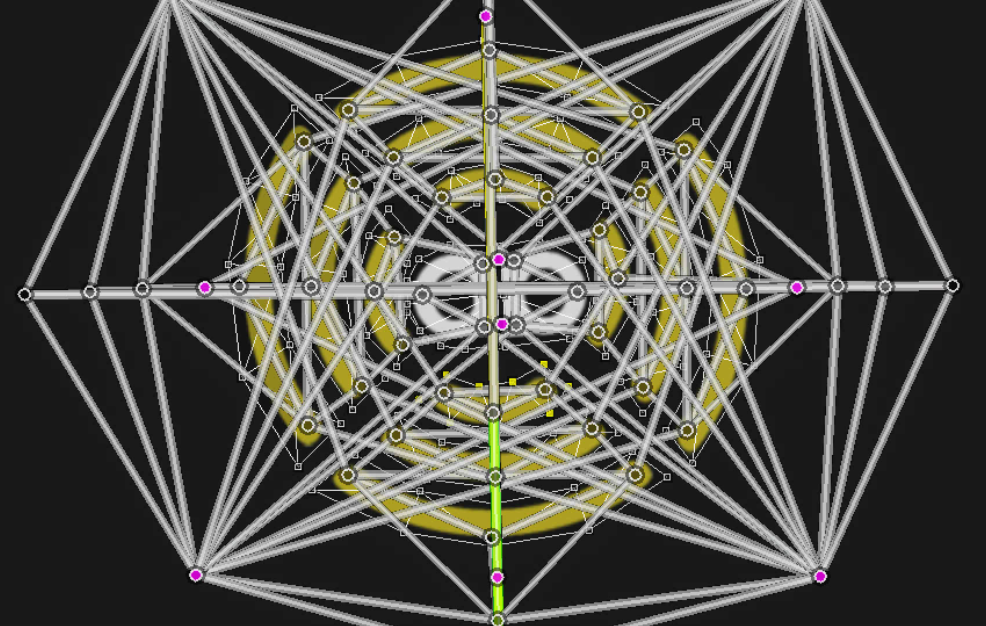

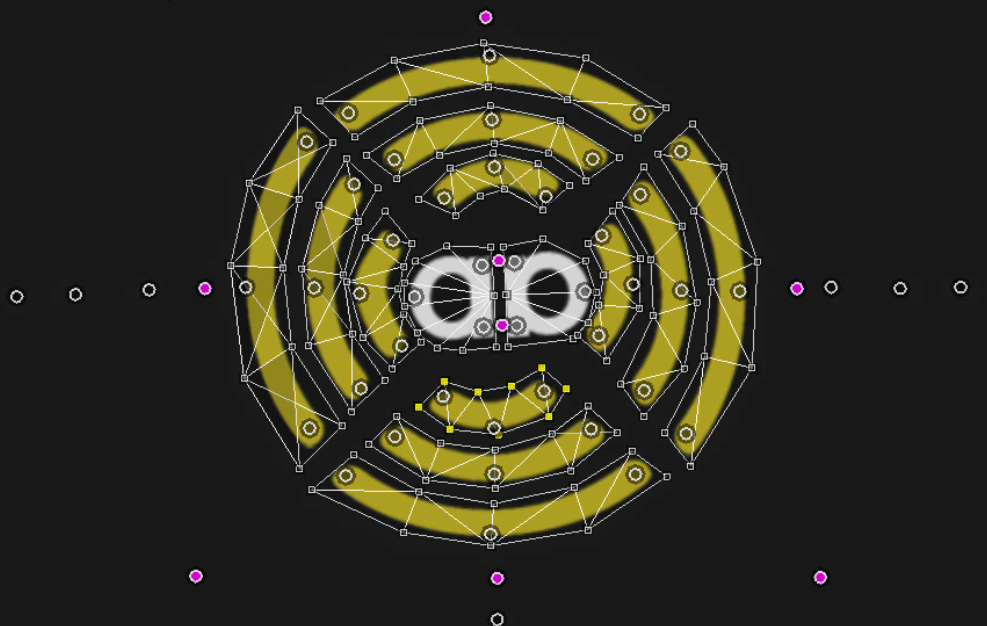

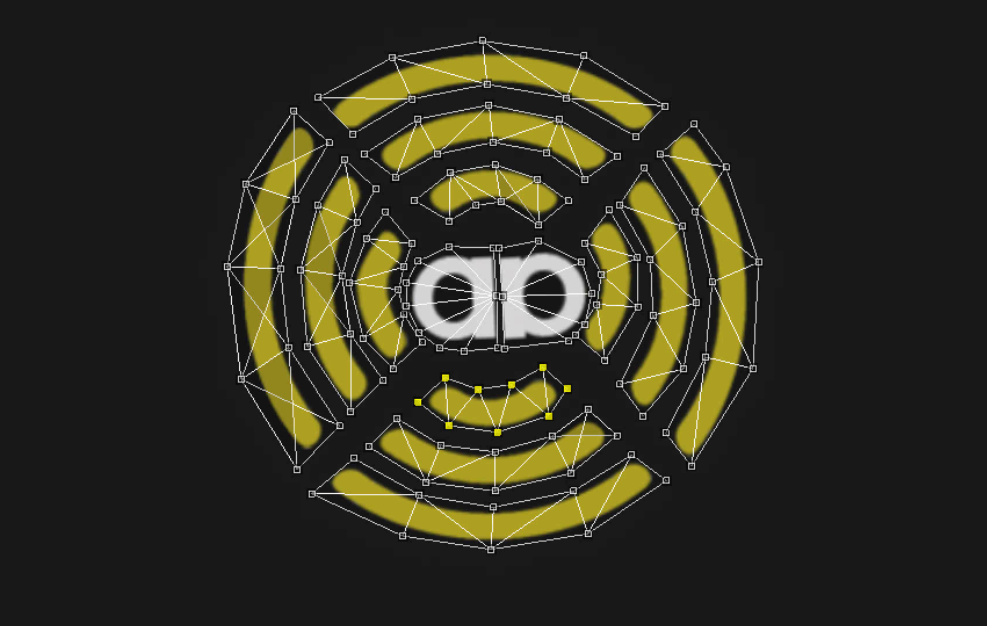

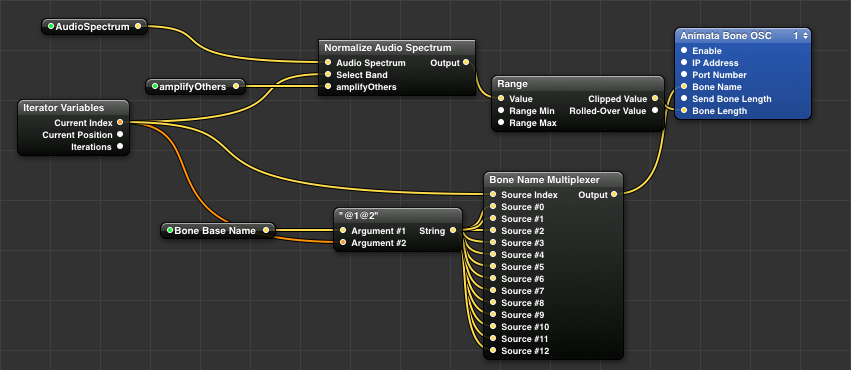

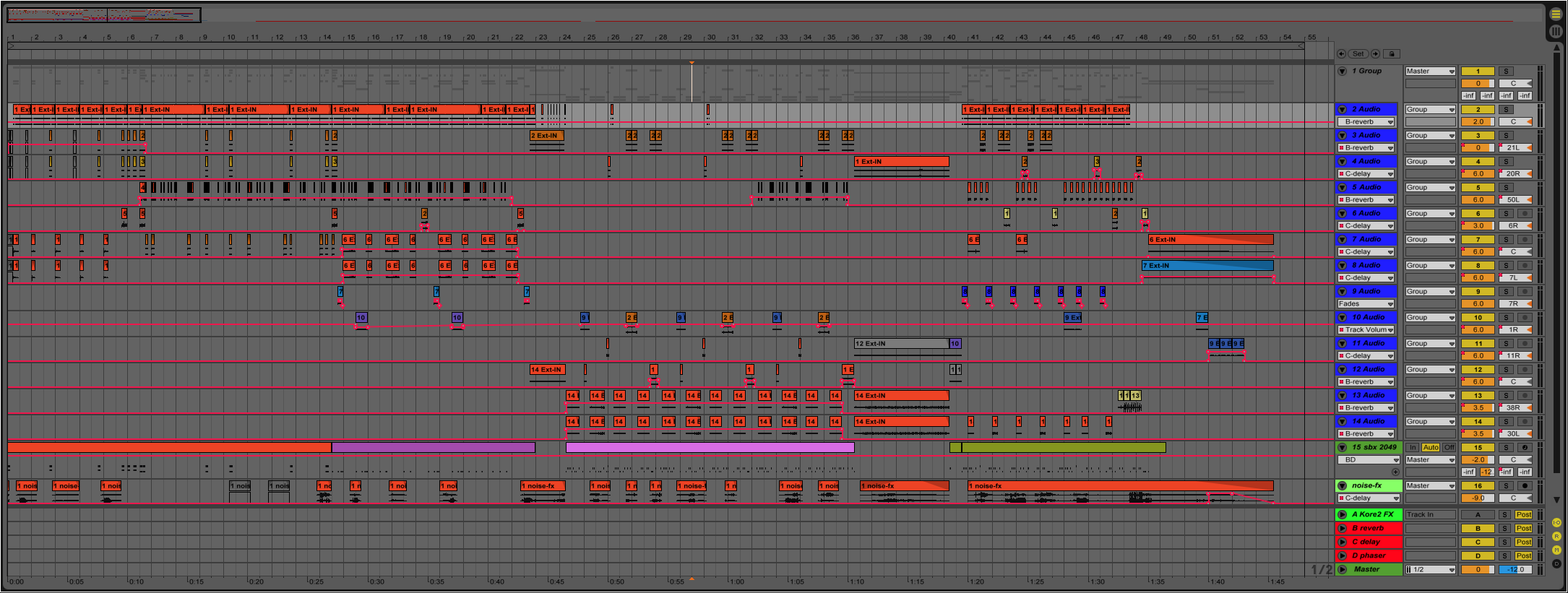

I’ve been doing a bit of OSC implementation in my Max patches, so I thought I’d try to develop a loop-based patch controlled by my iPhone. I recorded a whole bunch of source material with my phone coil mic and edited it into usable intro, loop and outro sounds to be triggered in the patch. Since I ended up with about 20 different sounds for each category, I restricted the number of voices and added a randomize feature to generate a variety of saber sounds.
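The intro/loop/outro logic is easy to picture outside of Max. The sketch below is a hypothetical Python model, not the actual patch: the names `SaberBank`, `SaberVoice`, `ignite` and `retract` are made up for illustration, and "restricted the number of voices" is interpreted here as a polyphony cap where the oldest voice is stolen once the cap is reached (an assumption). Each ignition picks a random intro, loop and outro from its pool.

```python
import random
from dataclasses import dataclass


@dataclass
class SaberVoice:
    """One saber 'take': a randomly chosen intro, loop and outro file."""
    intro: str
    loop: str
    outro: str


class SaberBank:
    """Hypothetical randomized intro/loop/outro picker with a voice cap."""

    def __init__(self, intros, loops, outros, max_voices=4, seed=None):
        if not (intros and loops and outros):
            raise ValueError("each pool needs at least one sound")
        self.intros = list(intros)
        self.loops = list(loops)
        self.outros = list(outros)
        self.max_voices = max_voices
        # Seedable, so a favourite random combination can be recalled later.
        self.rng = random.Random(seed)
        self.active = []  # active voices, oldest first

    def ignite(self):
        """Start a new voice, stealing the oldest one if the cap is reached."""
        if len(self.active) >= self.max_voices:
            self.active.pop(0)  # assumed policy: oldest-voice stealing
        voice = SaberVoice(
            intro=self.rng.choice(self.intros),
            loop=self.rng.choice(self.loops),
            outro=self.rng.choice(self.outros),
        )
        self.active.append(voice)
        return voice

    def retract(self):
        """Stop the newest voice and return the outro file it should play."""
        if not self.active:
            return None
        return self.active.pop().outro
```

With pools of about 20 files each (for example hypothetical names like `intro_01.wav` … `intro_20.wav`), every call to `ignite()` yields one of roughly 8,000 intro/loop/outro combinations, which is one way a small set of recordings can produce many distinct sabers.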

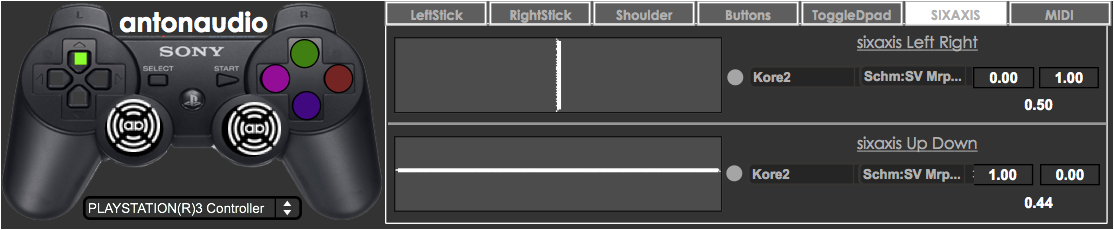

The OSC was sent using OSCemote on my iPhone. It’s basically modulating various parameters of a comb filter, a phaser and a Doppler effect using the X, Y and Z values. It was nice to create this sense of ‘movement’ through actual physical movement.
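To make the X/Y/Z mapping idea concrete, here is a minimal Python sketch under several assumptions: the accelerometer values are taken to arrive in a -1 to 1 range, and which axis drives which effect (X → comb delay, Y → phaser rate, Z → Doppler) as well as every parameter range are hypothetical choices, not the settings used in the patch. The Doppler part uses the standard moving-source formula f′ = f · c / (c − v), and the comb filter is a plain feedback comb, y[n] = x[n] + g · y[n − D].

```python
SPEED_OF_SOUND = 343.0  # m/s, air at roughly 20 °C


def scale(value, in_lo, in_hi, out_lo, out_hi):
    """Clamp value to [in_lo, in_hi], then map it linearly to [out_lo, out_hi]."""
    value = min(max(value, in_lo), in_hi)
    t = (value - in_lo) / (in_hi - in_lo)
    return out_lo + t * (out_hi - out_lo)


def doppler_ratio(source_velocity):
    """Pitch ratio for a source moving toward (+) or away from (-) a still listener."""
    return SPEED_OF_SOUND / (SPEED_OF_SOUND - source_velocity)


def map_accel(x, y, z):
    """Hypothetical axis-to-parameter mapping; all ranges are assumptions."""
    return {
        "comb_delay_ms": scale(x, -1.0, 1.0, 1.0, 20.0),
        "phaser_rate_hz": scale(y, -1.0, 1.0, 0.1, 8.0),
        # Treat Z as a virtual source speed of up to +/-30 m/s.
        "doppler_ratio": doppler_ratio(scale(z, -1.0, 1.0, -30.0, 30.0)),
    }


def feedback_comb(samples, delay, feedback):
    """Feedback comb filter: y[n] = x[n] + feedback * y[n - delay]."""
    out = []
    for n, x in enumerate(samples):
        delayed = out[n - delay] if n >= delay else 0.0
        out.append(x + feedback * delayed)
    return out
```

Holding the phone flat (0, 0, 0) lands every parameter in the middle of its range with no pitch shift, while tilting along Z pushes the pitch ratio above or below 1, which is roughly the "swoosh" a Doppler pass-by gives a saber swing.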

I’ve captured 12 different saber variations, since the source loops made it easy to keep creating slightly different timbres. The patch is still a bit of a work in progress, and I plan on making it more modular so I can easily prototype with loops and effects processing in this way.