A bunch of shaders that I modified to be driven by audio or used as a canvas for an audiovisual sketch.

Hit Record = (sound + music) * (art + tech);

An old session designed to switch between elemental themes and quickly generate some content automatically. It does not cover the fine details; it is more of a broad-strokes approach for creating movement elements and layers.

It was also a simulation of what could be a game audio system that adapts to cinematics at runtime.

Found some really old sound design concepts/ideas on the drive and thought I’d just archive them here in this playlist:

procedural wind simulation using Pure Data

some doppler / fake doppler techniques

procedural tank engine element that continuously rises à la Shepard tone

procedural helicopter in Pure Data

some granular techniques
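For the continuously rising engine idea, here is a minimal Python sketch of how a Shepard-style rise can be laid out. It is not the original patch: the function name `shepard_partials`, the 20 Hz base and the raised-cosine loudness curve are all assumptions chosen for illustration. It stacks octave-spaced partials that glide upward together, fading each one in at the bottom and out at the top so the stack can loop without an audible reset.

```python
import math

def shepard_partials(phase, base_hz=20.0, octaves=8):
    """Return (frequency, amplitude) pairs for one instant of a rising Shepard tone.

    Only the fractional part of `phase` matters, so the partial set at
    phase 1.0 is identical to the set at phase 0.0 and the rise can loop.
    All parameter values are illustrative assumptions.
    """
    frac = phase % 1.0
    partials = []
    for k in range(octaves):
        position = (k + frac) / octaves      # 0..1 across the whole stack
        freq = base_hz * 2.0 ** (k + frac)   # octave-spaced, gliding upward
        # Raised cosine: silent at the very bottom and top, loudest mid-stack.
        amp = 0.5 - 0.5 * math.cos(2.0 * math.pi * position)
        partials.append((freq, amp))
    return partials

# Sweep phase from 0 to 1 repeatedly and feed each pair to an oscillator bank.
for freq, amp in shepard_partials(0.25):
    print(f"{freq:9.2f} Hz  amp {amp:.3f}")
```

Because each partial is silent when it enters and when it leaves the stack, wrapping the phase back to zero should not produce a click or an obvious drop in pitch.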

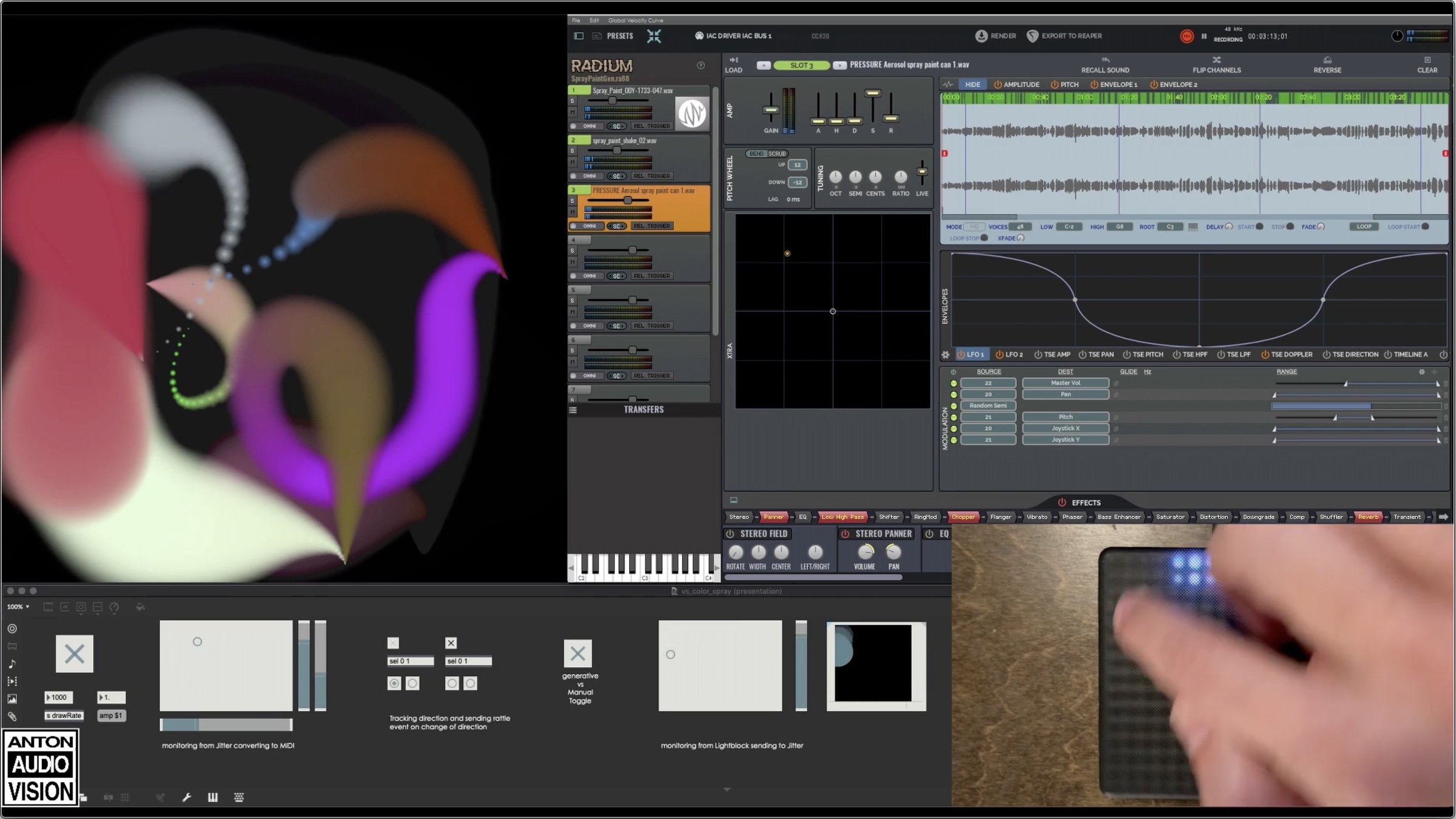

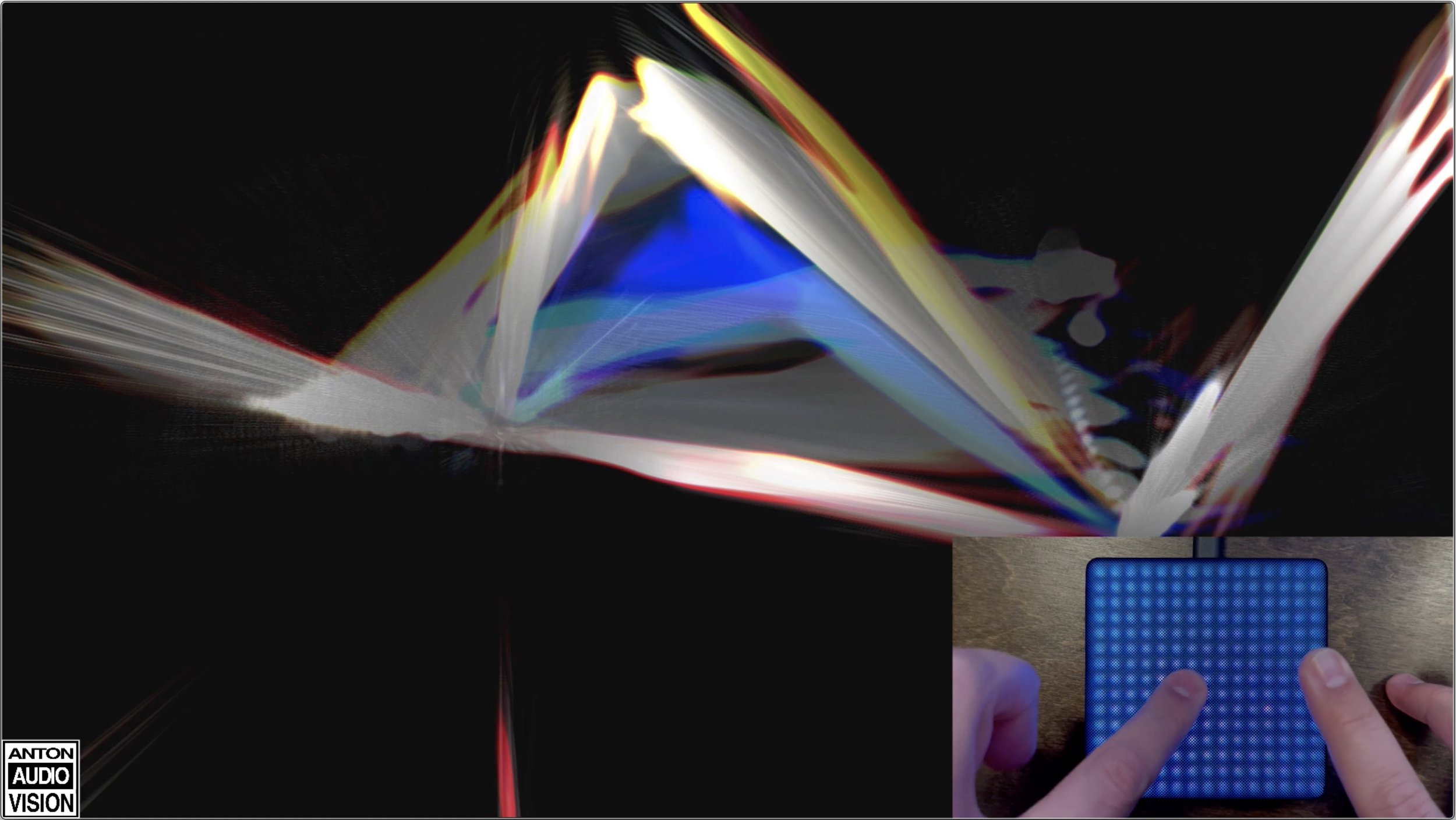

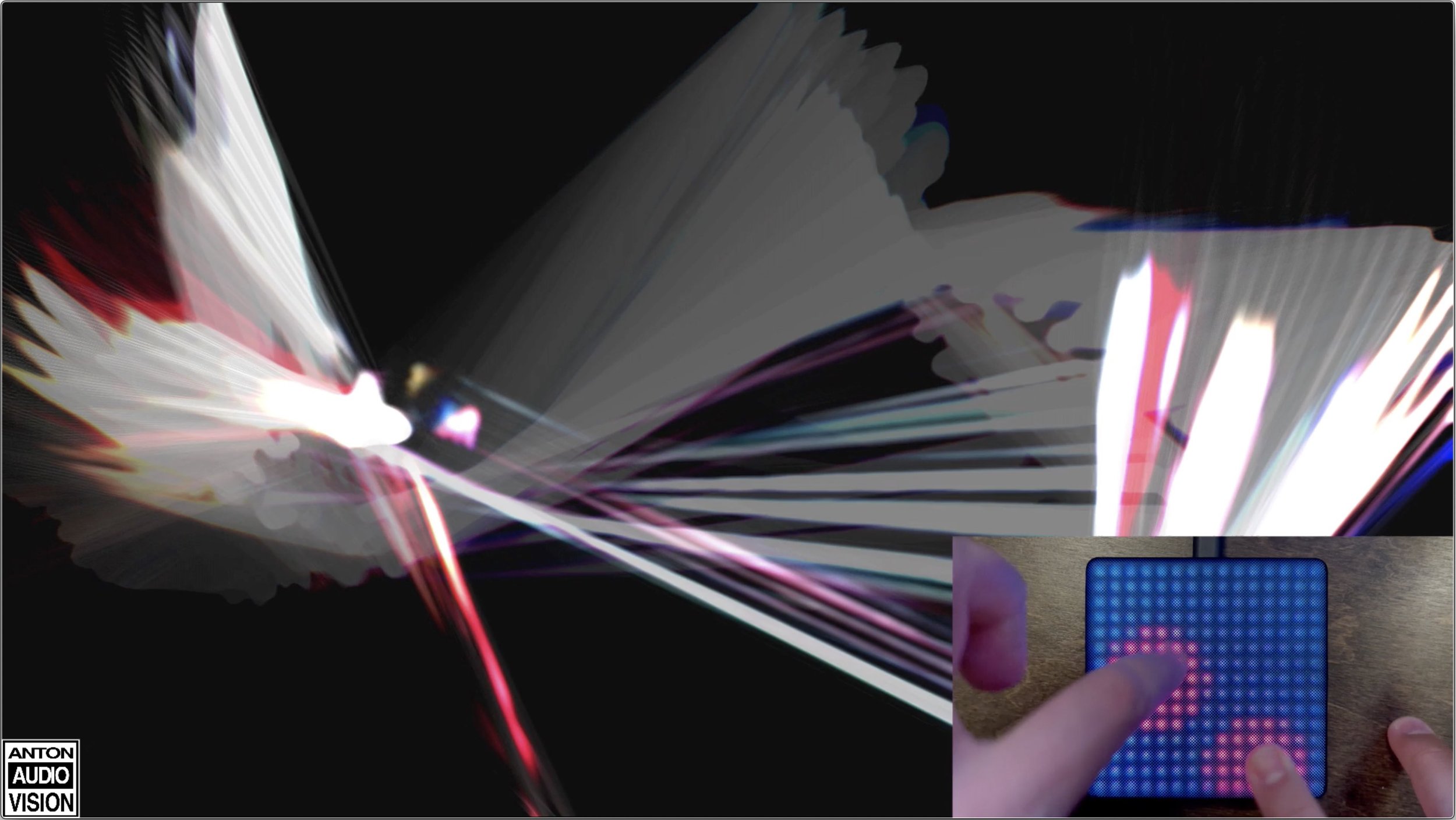

Generative SprayPaint simulator in MaxMSPJitter that controls sound in real time in Soundminer's Radium sampler. It exposes the generated X and Y positions and the Size of the spray as variables, and a change in direction triggers events. These are then translated to MIDI and sent to the Radium sampler to trigger layers and drive modulation.

The more manually controlled version uses a Roli Lightblock to pass control parameters to Max over USB, where I override the generative logic and manually control X, Y and Size (with MPE pressure). These values are then tracked, translated to MIDI after the visuals are generated, and sent to Radium to trigger layers and drive modulation.
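The X/Y/Size to MIDI translation can be sketched roughly as follows. This is a Python illustration rather than the Max patch: the CC numbers, the trigger note, the 0..1 input range and the choice to watch only horizontal direction are all assumptions, and events are returned as plain tuples instead of being sent to a real MIDI port.

```python
def to_cc(value, lo=0.0, hi=1.0):
    """Scale value from [lo, hi] to a 7-bit MIDI value (0-127), clamped."""
    norm = (value - lo) / (hi - lo)
    return max(0, min(127, round(norm * 127)))

def spray_to_midi(frames, x_cc=20, y_cc=21, size_cc=22, trigger_note=60):
    """Translate (x, y, size) frames, each normalized to 0..1, into events.

    Events are ("cc", number, value) or ("note_on", note, velocity).
    The CC numbers and trigger note are hypothetical placeholders.
    """
    events = []
    prev_x = None
    prev_dir = 0
    for x, y, size in frames:
        # Continuous modulation: every frame becomes three controller values.
        events.append(("cc", x_cc, to_cc(x)))
        events.append(("cc", y_cc, to_cc(y)))
        events.append(("cc", size_cc, to_cc(size)))
        if prev_x is not None:
            dx = x - prev_x
            direction = (dx > 0) - (dx < 0)   # -1, 0 or +1
            if direction != 0:
                # A flip in horizontal direction fires a one-shot trigger.
                if prev_dir != 0 and direction != prev_dir:
                    events.append(("note_on", trigger_note, max(1, to_cc(size))))
                prev_dir = direction
        prev_x = x
    return events

# Spray moves right, then turns back left: one trigger on the turn.
frames = [(0.0, 0.0, 1.0), (0.5, 0.0, 1.0), (1.0, 0.0, 1.0), (0.5, 0.0, 1.0)]
for event in spray_to_midi(frames):
    print(event)
```

Whether the positions come from the generative logic or from a hand on the controller, the same translation step applies, since it only looks at the resulting X, Y and Size values.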

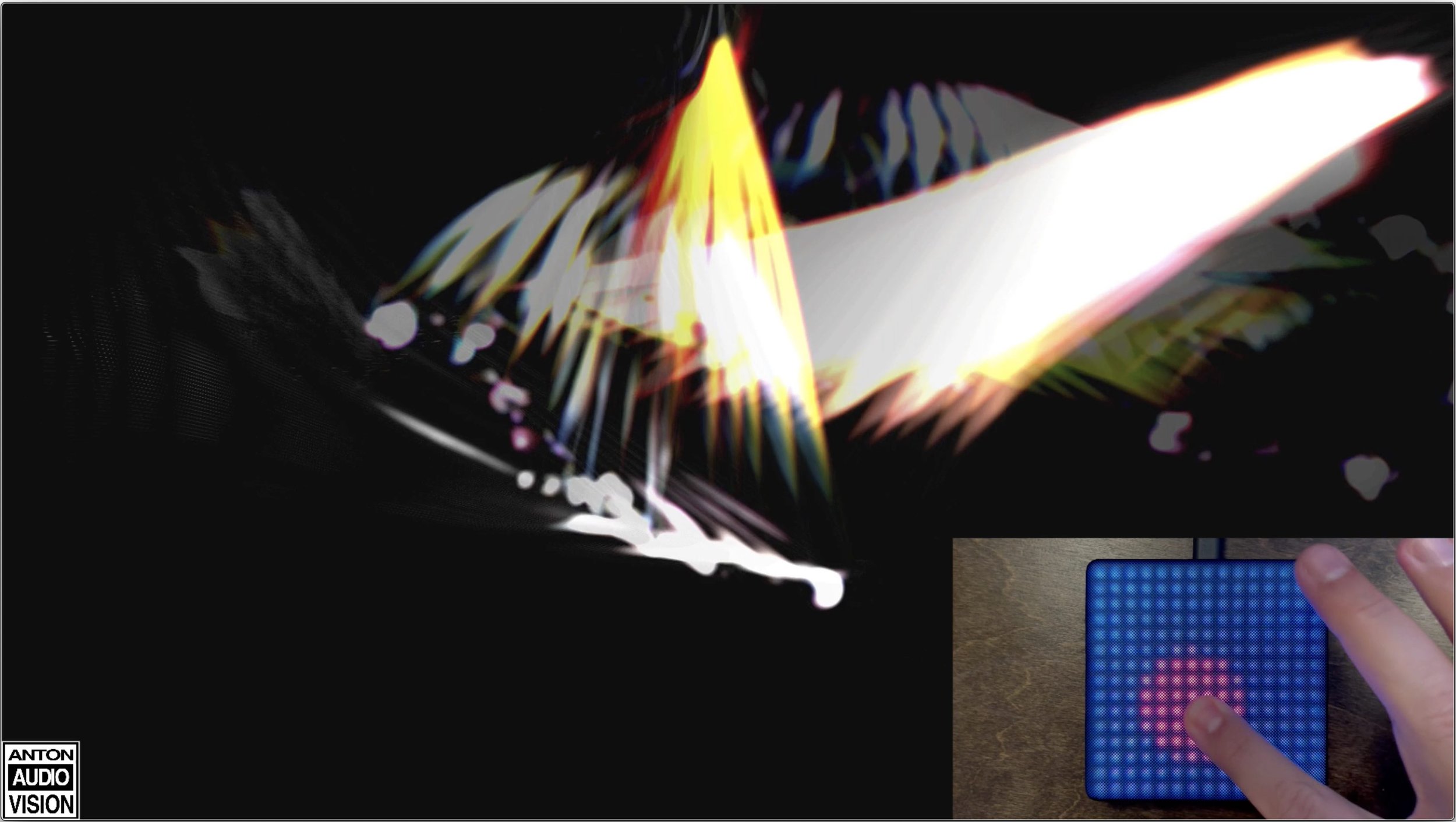

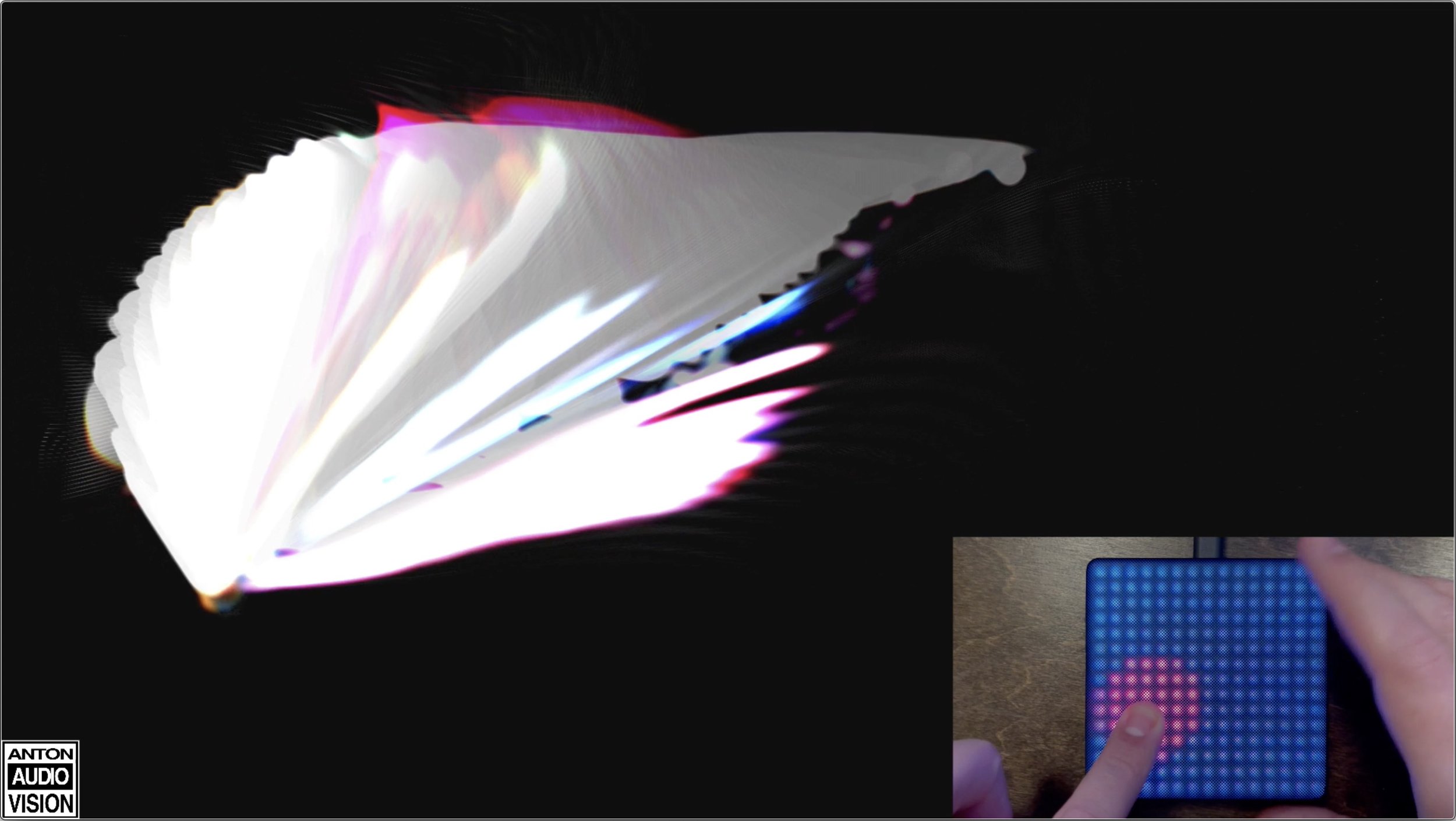

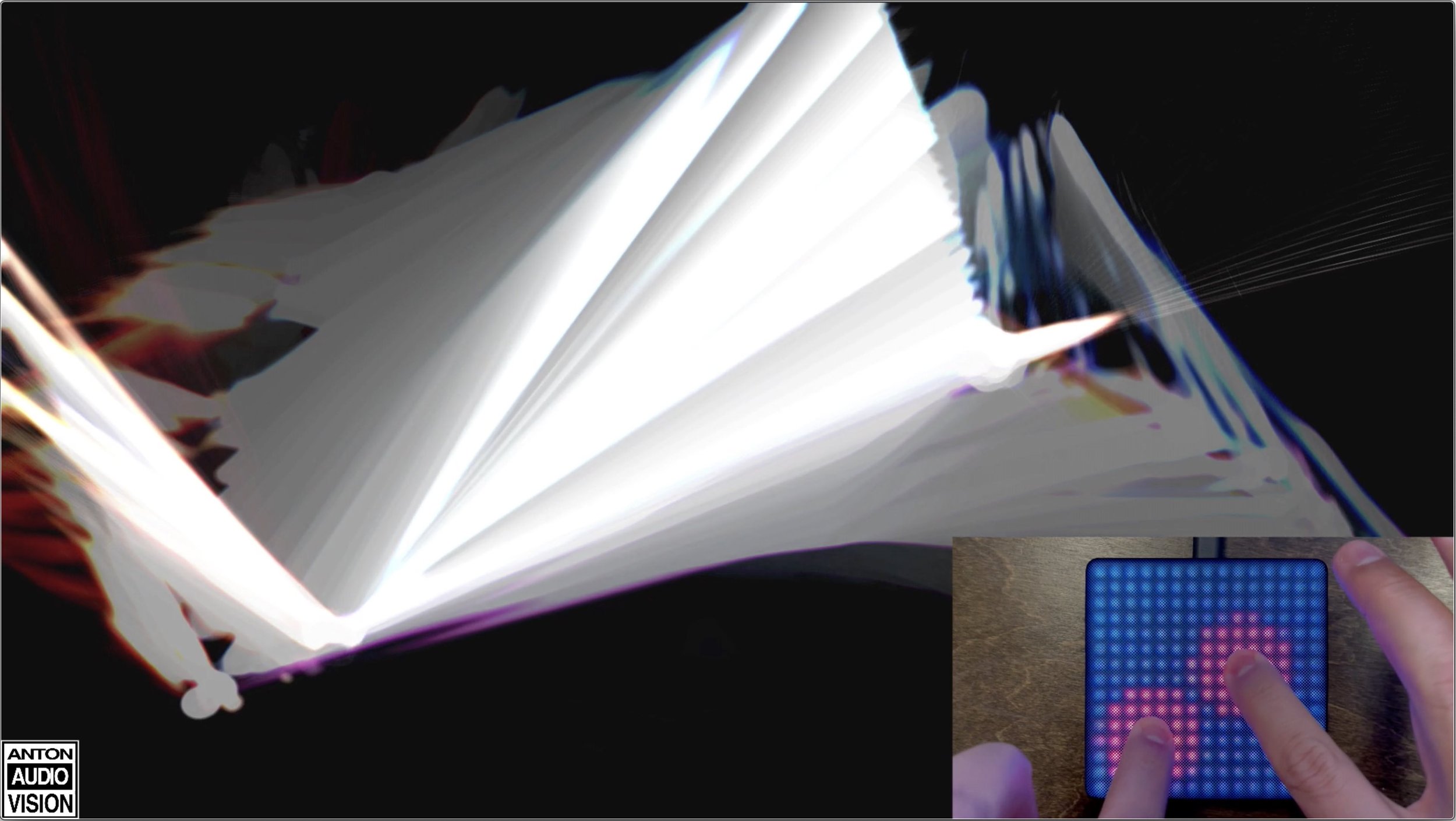

Fun session controlling visuals in MaxMSPJitter while simultaneously triggering Soundminer Radium samplers and the Massive synthesizer with a Roli Lightblock.

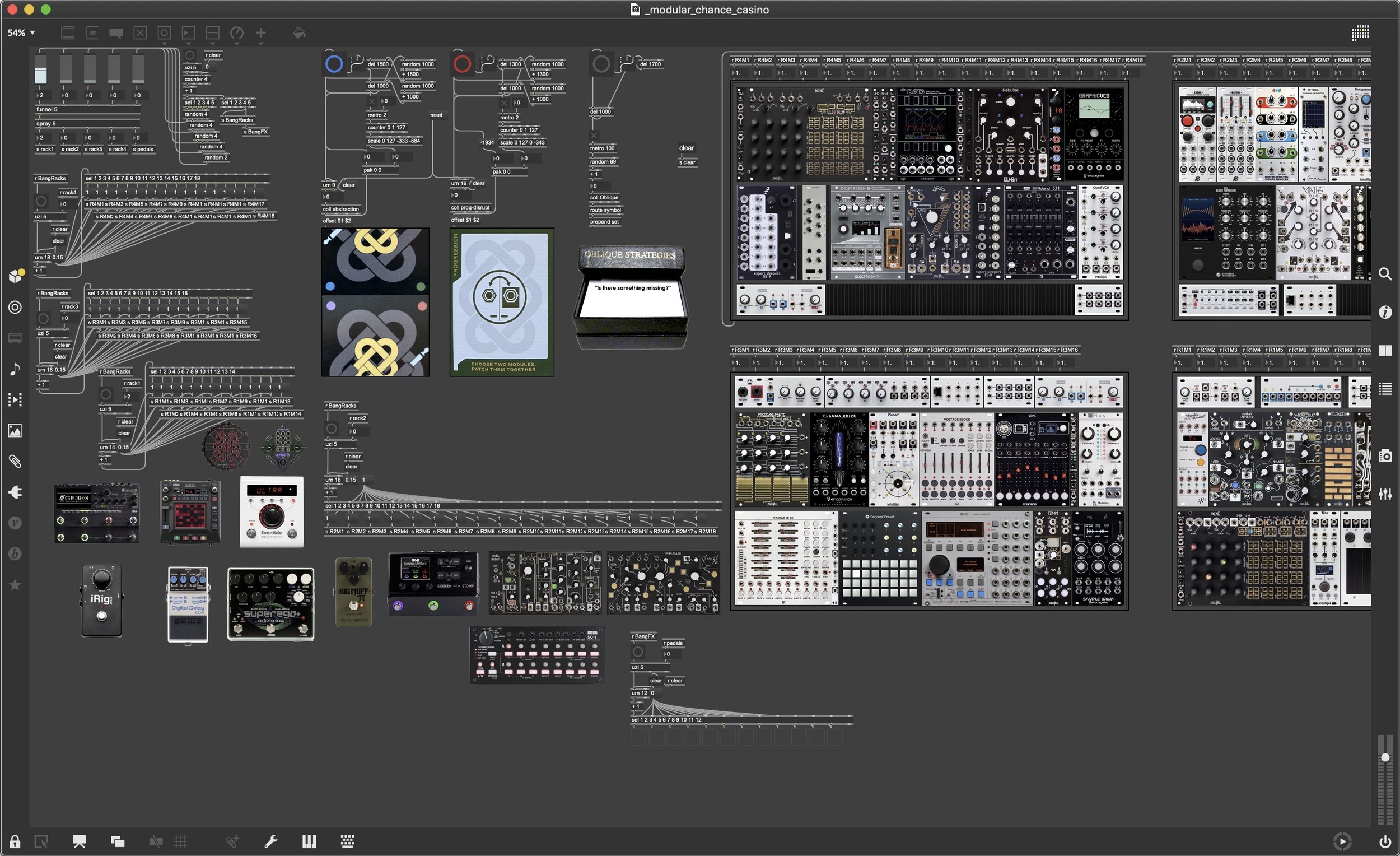

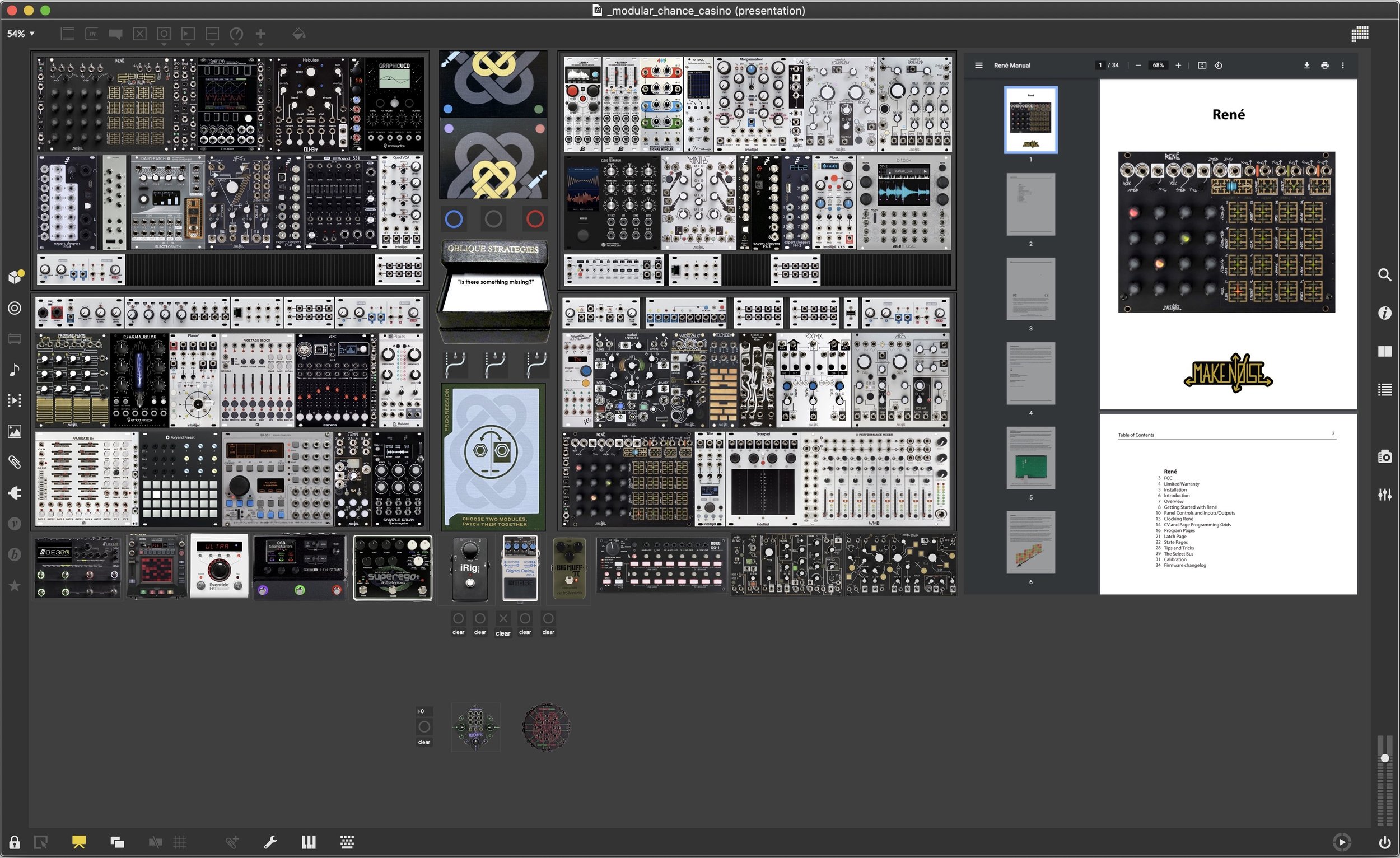

Work in progress: making a digital card game in Max8 that combines PATCH with Oblique Strategies, which I'm calling my Modular Chance Casino. The idea is to promote creativity while cutting down the often overwhelming number of choices when playing on the modular system.

I'm incorporating my entire system into the card instructions, so when a card suggests choosing X number of modules, etc., it will just pick them for me.

I'm also adding live links to online documentation to refresh my memory, which is already full of audio documentation.

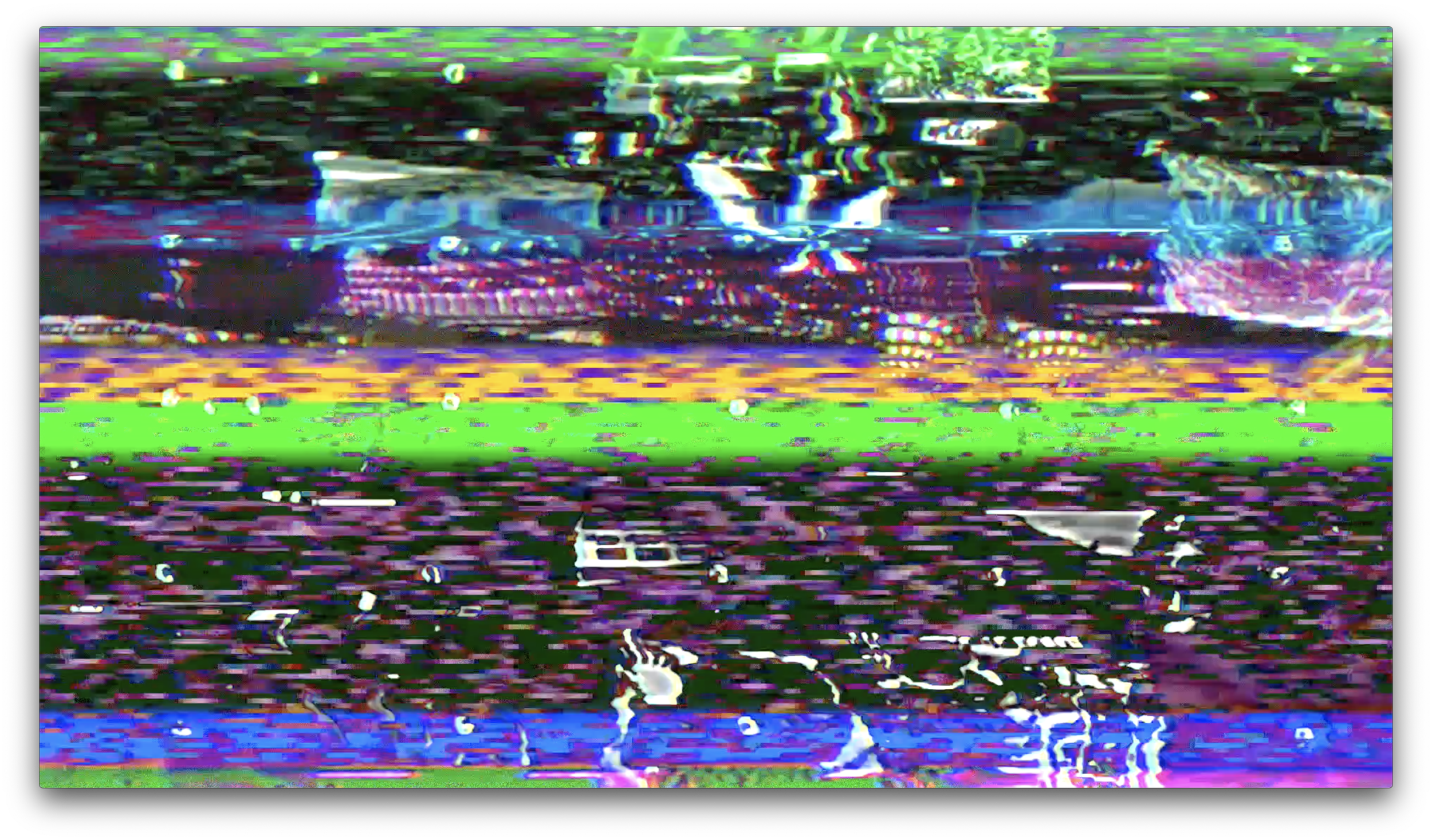

Experimenting with some video and image processing.

Generated some AudioVisual smearing displacement videos/images.

Some chill OP-Z beats in the Maui heat from a vacation late last year, with added ukulele accompaniment sent through the Microcosm.

Cheers for tuning into the antonaudio frequency on your internet dial📻

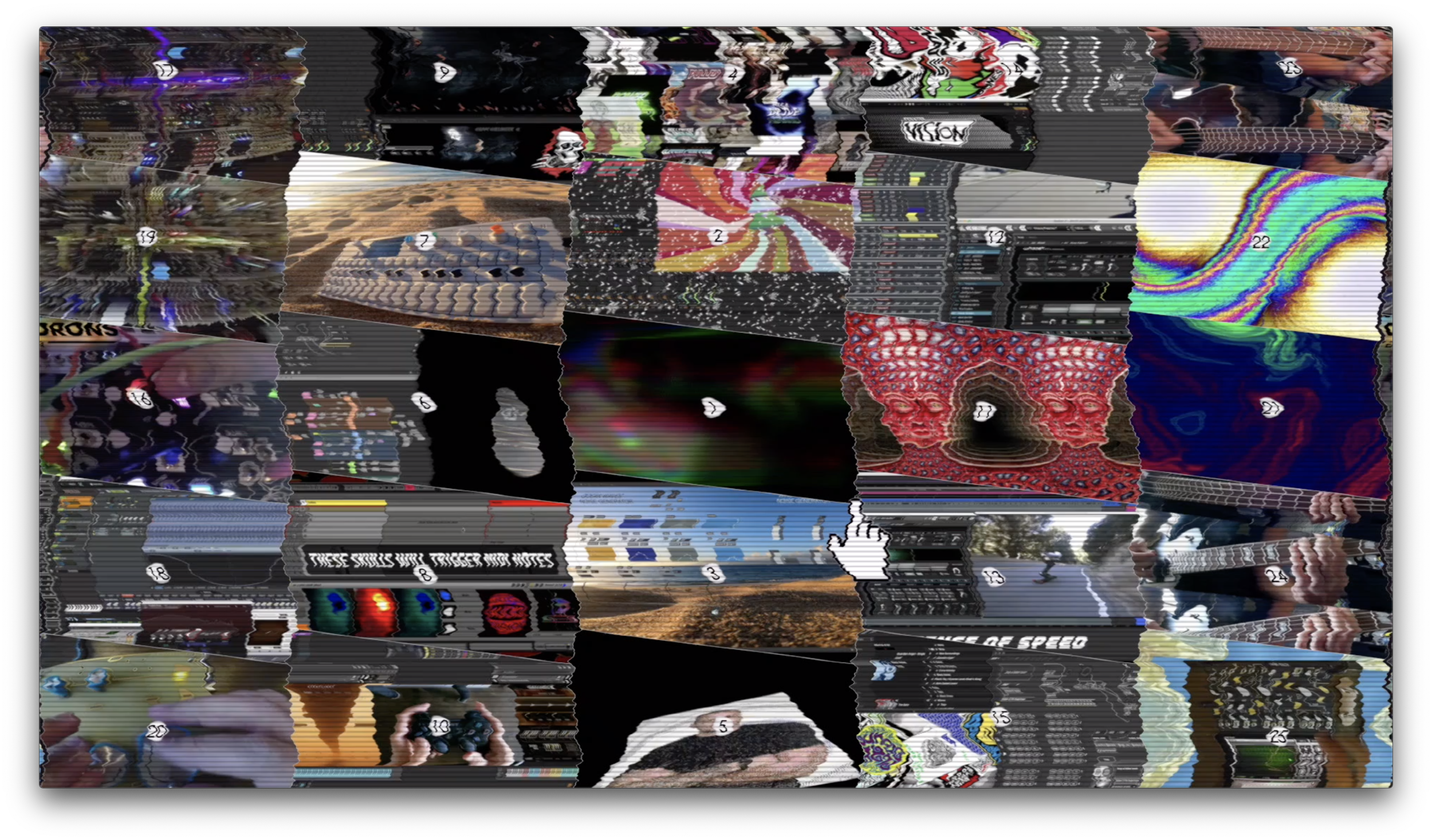

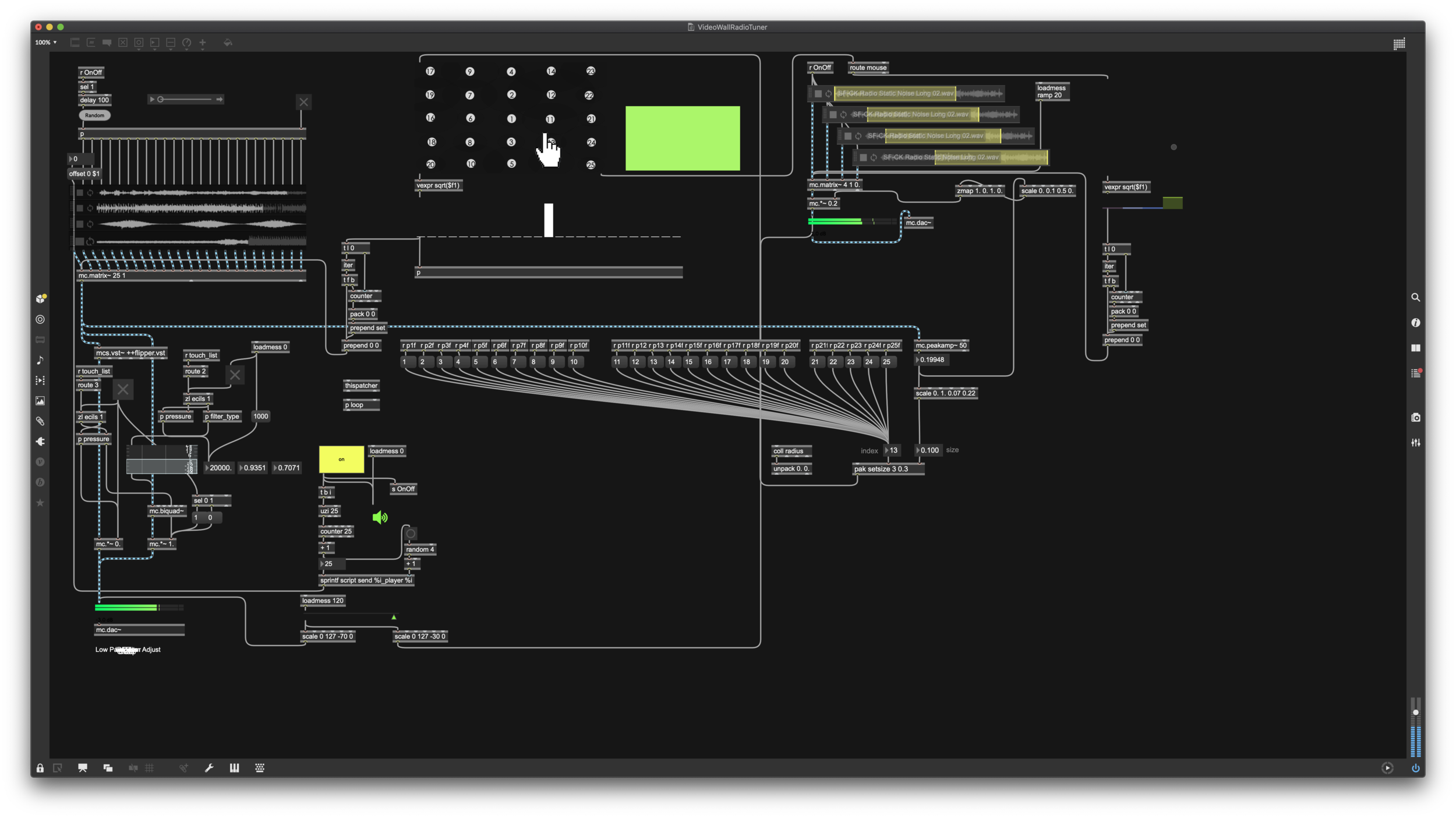

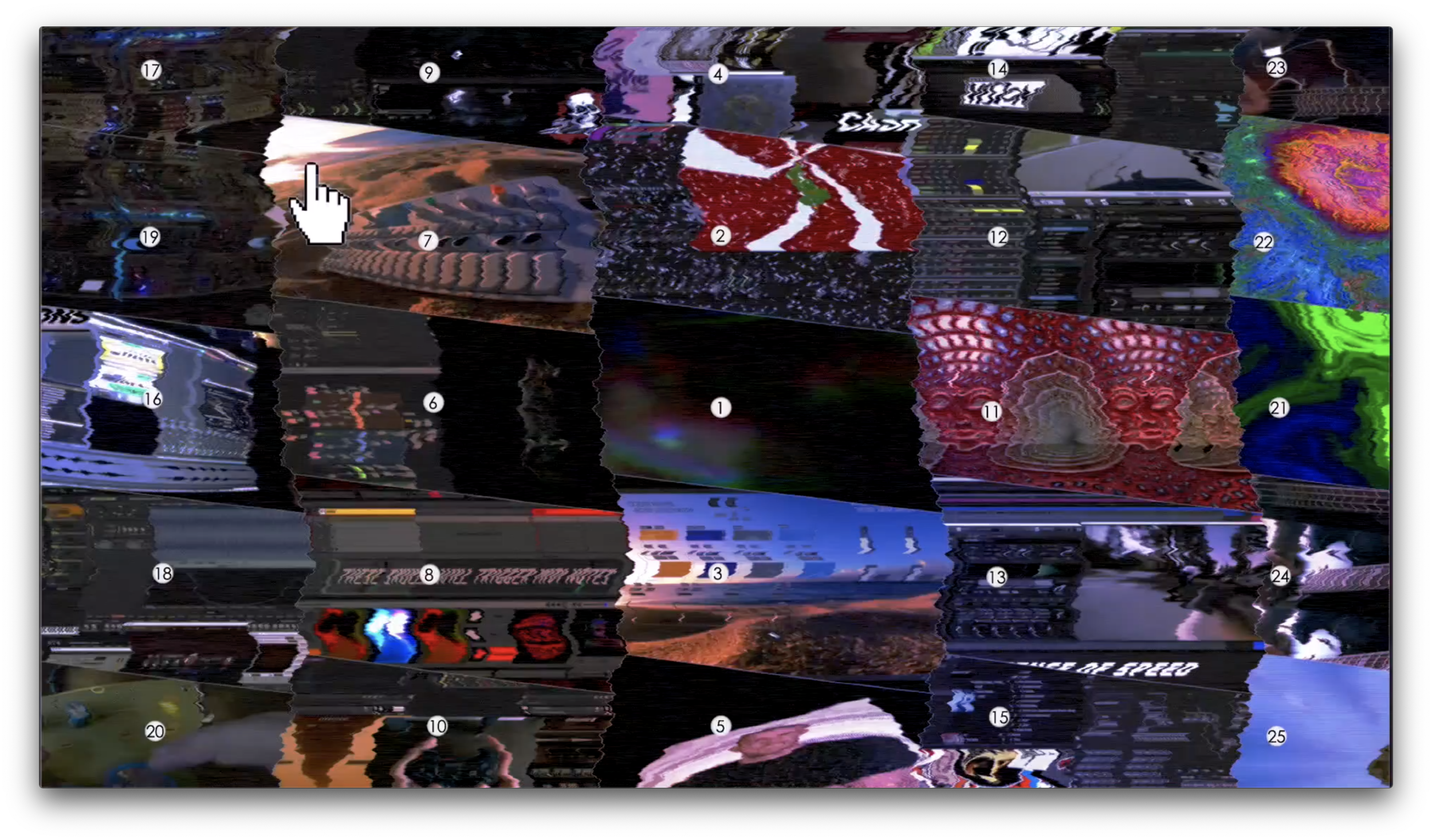

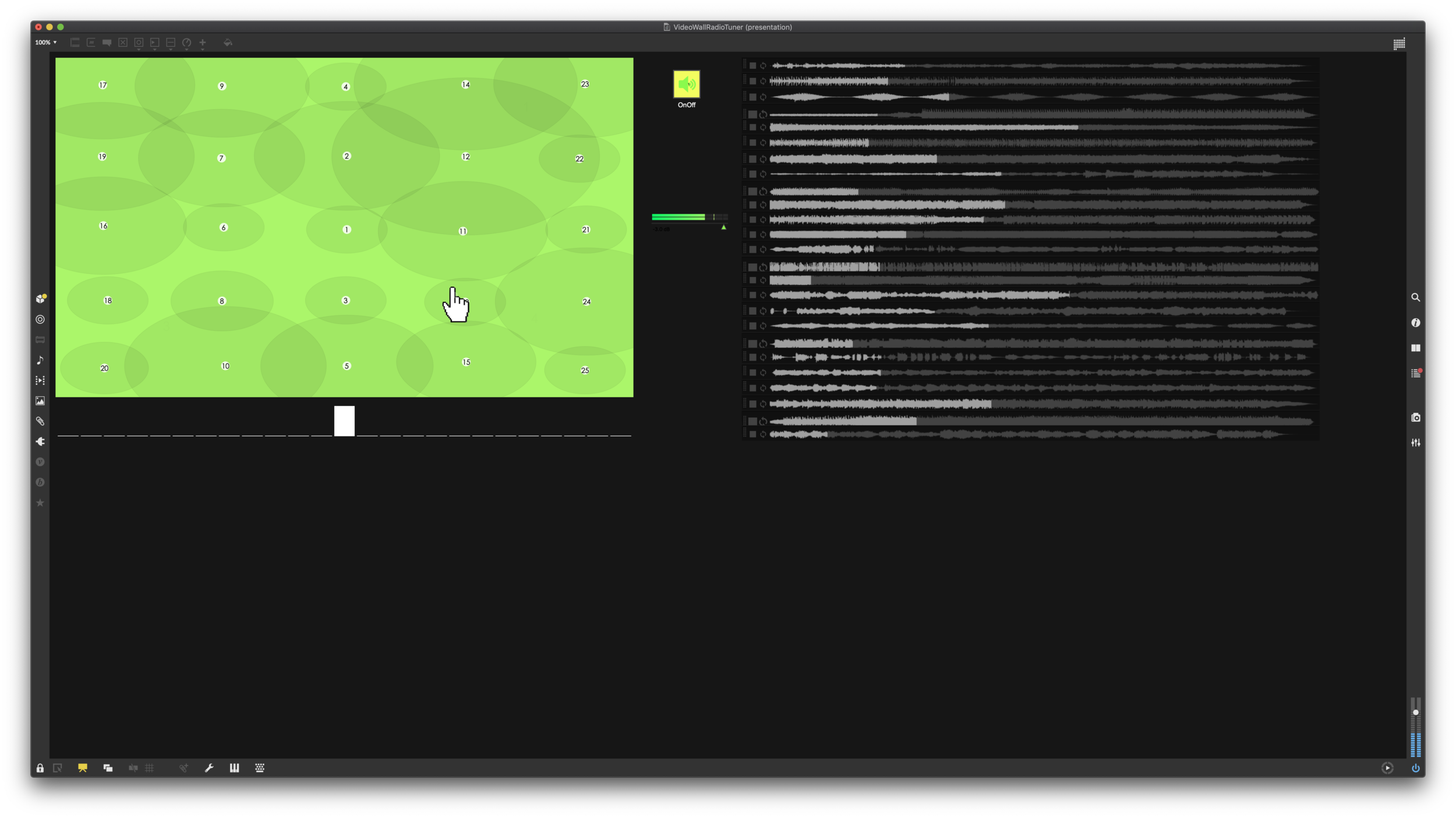

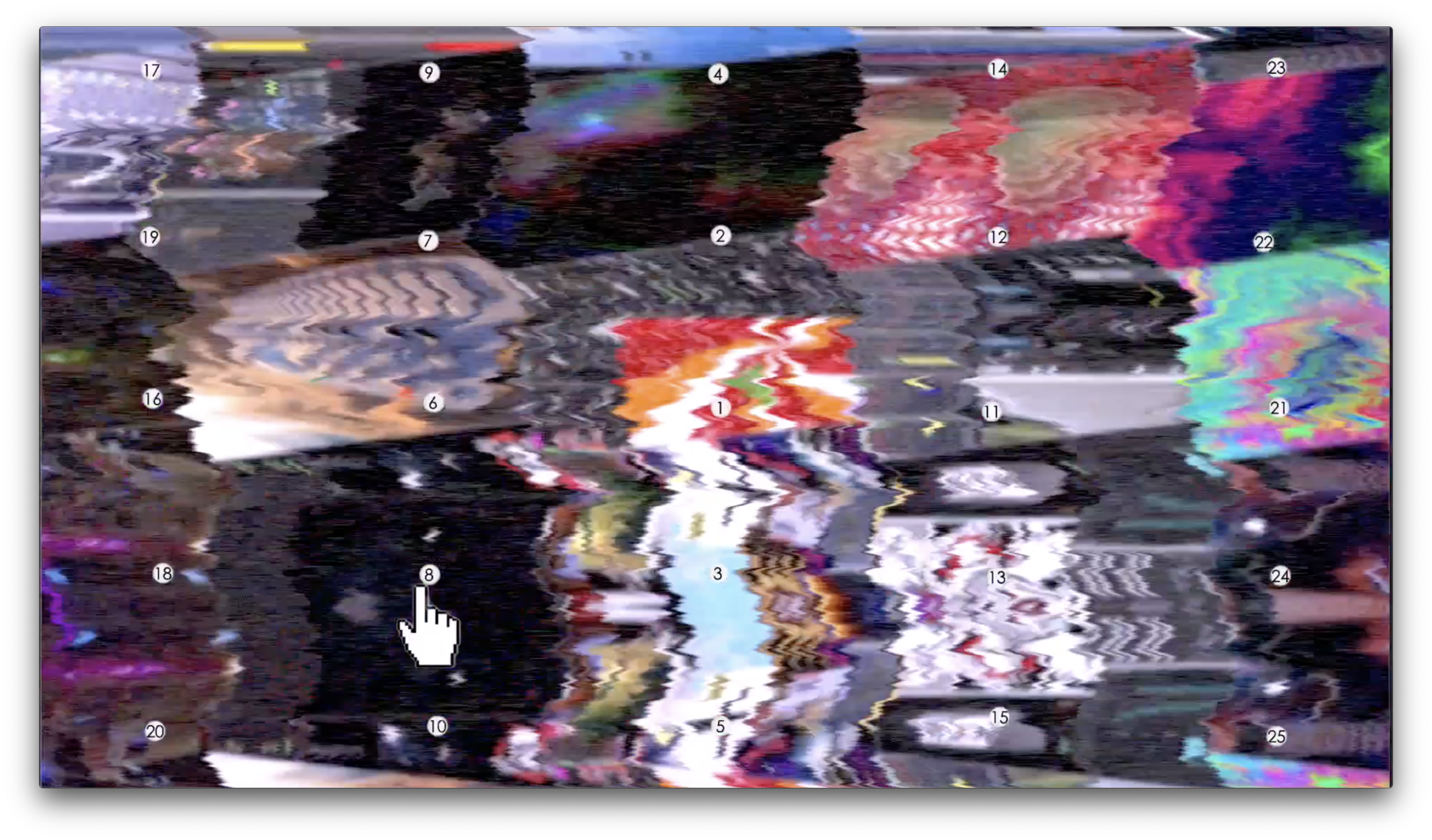

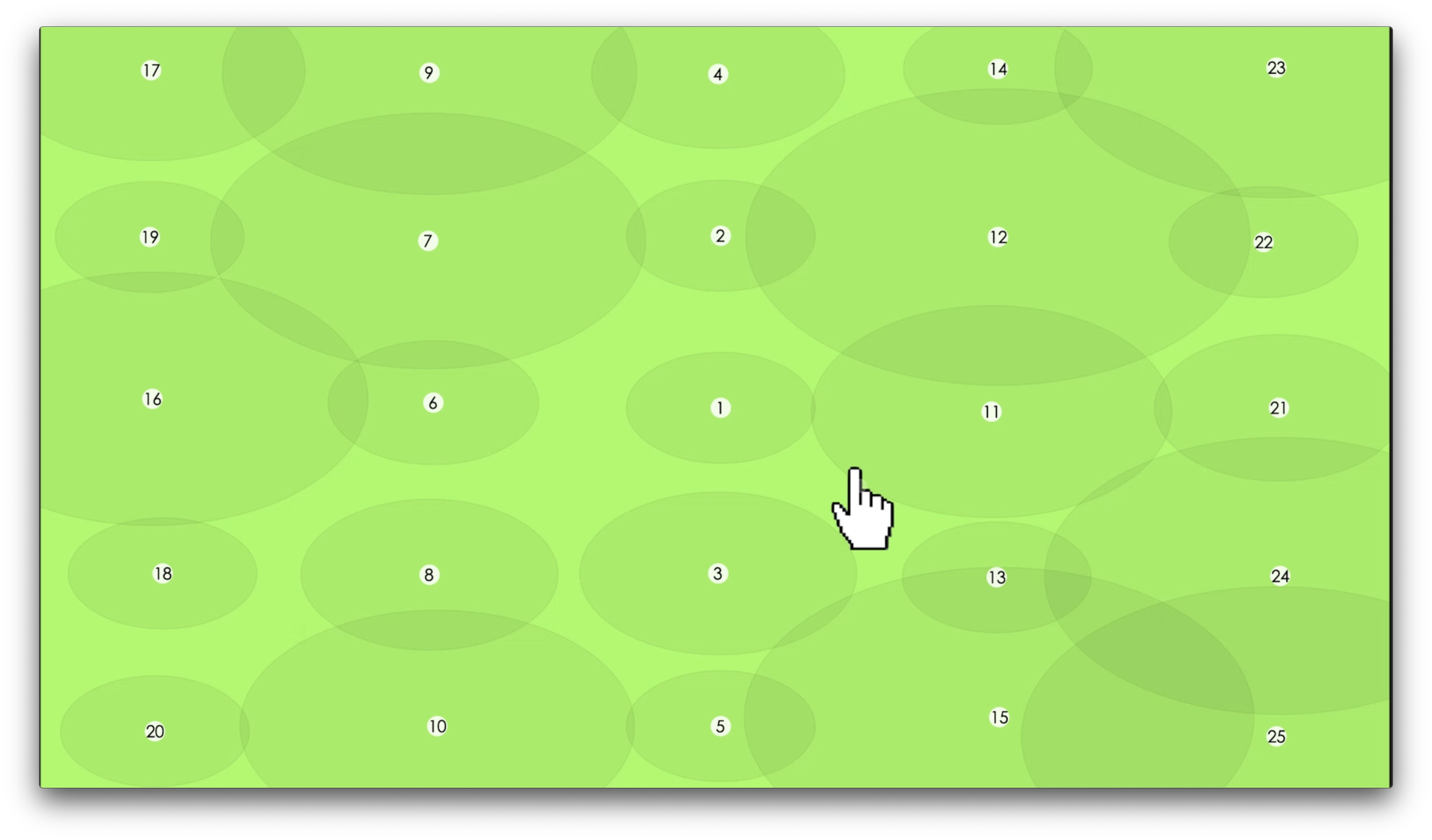

I wanted to do some kind of #yearinreview videowall thingy with a mixer matrix that could scrub across the sound of each clip… so I built this weird setup:

The video wall was created in Final Cut, the mixer matrix was created in Max, and then I combined the two systems.

I thought of several ways to do this in real time with Jitter or VJ software, but 25 simultaneous video and audio streams are quite taxing on the system... so I just got 'er done this way until I learn more video ninja techniques.
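One way to think about a mixer matrix that scrubs across clips is as a grid of gains that follows a cursor. The sketch below is a Python illustration, not the Max patch: it assumes the 25 clips sit in a 5x5 grid and that gain falls off linearly with distance from the cursor, and the name `matrix_gains` and the `radius` parameter are hypothetical.

```python
import math

def matrix_gains(cursor_x, cursor_y, rows=5, cols=5, radius=1.0):
    """Return a rows x cols grid of gains (0..1) for a cursor in grid units.

    A clip's gain is 1.0 when the cursor sits on its cell and fades
    linearly to 0.0 at `radius` cells away. The 5x5 layout is an assumption.
    """
    gains = []
    for r in range(rows):
        row = []
        for c in range(cols):
            dist = math.hypot(c - cursor_x, r - cursor_y)
            row.append(max(0.0, 1.0 - dist / radius))
        gains.append(row)
    return gains

# Cursor halfway between the two middle-row clips: an equal crossfade.
for row in matrix_gains(2.5, 2.0):
    print(" ".join(f"{g:.2f}" for g in row))
```

A larger `radius` lets more neighbouring clips bleed in at once, which is closer to a blur than a scrub.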

Acoustic guitar and Hologram Electronics Microcosm.

Farewell 2021, been a blur. Happy Noise Year 2022

Just a little noodle on the guitar sent through the always dreamy Microcosm, also blending an audio-visual blur/RGB video offset thing with the wet/dry audio mix parameter. It's always fun to match the video effect with the audio effect; in this case the video effect was completely inspired and driven by the audio.

Christmas Carol of the Bells Remix and random re-sequencing with MIDI interactive tree scene and audiovisual snowflakes❄️🔔🎄🎹❄️Happy Holidays

MIDI Sequencing and Re-Sequencing happening in both Ableton Live and on the OP-Z.

Essentially, I chopped the original MIDI for Carol of the Bells into A, B and C parts assigned across instruments, programmed some parts back onto the OP-Z, and randomized all the parts for a generative experience.

Most voices come from the OP-Z, with additional accompaniment from the KORG NTS-1 Digital sent through the Microcosm.

Snowflakes driven by audio from Resolume. The snowflakes, however, seem to be hard for video streaming services to compress properly: my local capture is quite good, but the streamed versions are not as good. Download the original capture here if you like.
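The chop-and-randomize idea can be sketched in a few lines of Python. This is only an illustration of the approach, not what runs in Ableton Live or on the OP-Z: the function name `resequence` is hypothetical, and the note numbers are placeholders rather than the actual Carol of the Bells parts.

```python
import random

def resequence(parts, length, seed=None):
    """Build a random sequence of named parts and flatten it into notes.

    `parts` maps a part name (e.g. "A") to a list of MIDI note numbers.
    Returns (order, notes): the chosen part names and the resulting notes.
    """
    rng = random.Random(seed)
    names = sorted(parts)
    order = [rng.choice(names) for _ in range(length)]
    notes = []
    for name in order:
        notes.extend(parts[name])
    return order, notes

# Placeholder note data, NOT the real melody.
parts = {"A": [60, 62], "B": [64], "C": [65, 67, 69]}
order, notes = resequence(parts, length=8)
print(order)
print(notes)
```

Passing a fixed `seed` makes a given arrangement repeatable, while leaving it unset gives a different ordering on every run.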

An old and silly Christmas experiment controlling 2D puppets in Adobe Character Animator with MIDI, face tracking and text-to-speech synthesized singing using Splash Pro tech.

The first video is the result of sequencing the scene with MIDI

This video has some more detailed explanation of the inspiration and execution

A few exercises synthesizing, crafting and filtering noise to model some Waves and Wind

I shot these videos on a recent trip to Maui and thought some synthesized sound design against the video capture would make a fun and educational blog post.

First experiment is exploring noise filtering in Max8

Second experiment is exploring noise filtering and performance modulation using Modular Synthesis Hardware:

4 channels of noise in the ER301 with internal filters modulated by unsynced Batumi LFOs, signals split through QPAS, mixed through the Roland 531 and sent through the H9. A bit more abstract feeling.

Third experiment is exploring noise filtering and performance modulation using DSP Motion. It was fun to actually draw waves to generate the sound of synthesized waves.
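The common thread in all three experiments is noise pushed through a filter whose cutoff moves slowly. A minimal Python sketch of that idea follows. It is not the Max8 patch, the modular setup or DSP Motion: it assumes a simple one-pole low-pass and a single slow LFO, and every parameter value is an illustrative guess.

```python
import math
import random

def wind_noise(duration_s=1.0, sample_rate=8000, lfo_hz=0.2,
               min_cutoff=200.0, max_cutoff=1200.0, seed=0):
    """Return white noise shaped by a one-pole low-pass with a swept cutoff.

    A slow LFO moves the cutoff between min_cutoff and max_cutoff (Hz),
    which gives the gusting, swelling character of wind or surf.
    """
    rng = random.Random(seed)
    out = []
    y = 0.0
    for i in range(int(duration_s * sample_rate)):
        t = i / sample_rate
        lfo = 0.5 - 0.5 * math.cos(2.0 * math.pi * lfo_hz * t)   # 0..1
        cutoff = min_cutoff + (max_cutoff - min_cutoff) * lfo
        # One-pole low-pass coefficient for the current cutoff.
        alpha = 1.0 - math.exp(-2.0 * math.pi * cutoff / sample_rate)
        x = rng.uniform(-1.0, 1.0)    # white noise sample
        y += alpha * (x - y)          # smooth toward the new sample
        out.append(y)
    return out

samples = wind_noise(duration_s=2.0)
print(len(samples), "samples generated")
```

Running several of these with different, unsynced LFO rates and mixing them is one way to approximate the layered feel of the four-channel modular version.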

The wind was angry that day, my friends, like an old man sending back soup in a deli... even with the wind jammer on my Sony PCMD50 lol.

I'm always recording the beach and the birds and the wind when vacationing in Maui; it's such a pleasant and relaxing ambience. These videos show some different perspectives I happened to capture on video as well.